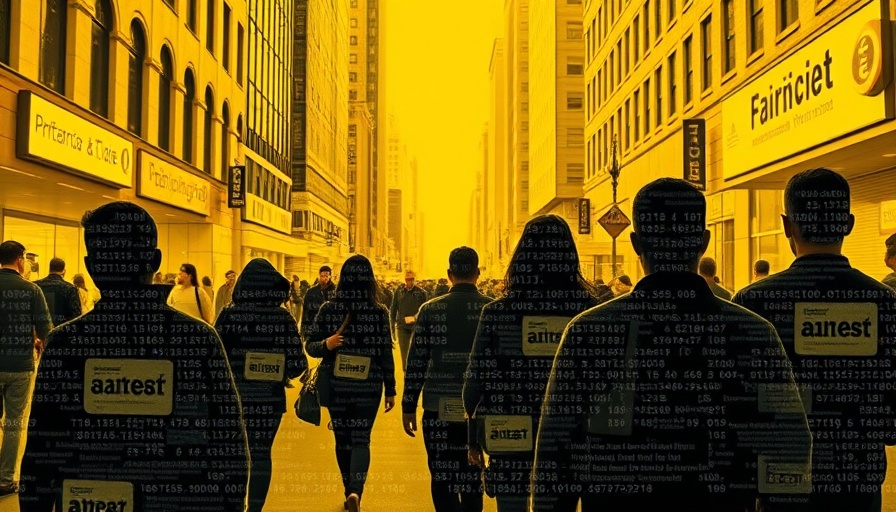

Amsterdam's Ambition: A Fair Welfare AI System

In a move that has captured the attention of policymakers and technologists worldwide, Amsterdam's welfare department embarked on an ambitious AI experiment aimed at reshaping how social support is administered. Hoping to build technology that could prevent welfare fraud while upholding citizens' rights, local officials dedicated substantial resources to the pilot project, envisioning a fair and efficient process. The initial results, however, revealed a harsh reality: the AI system fell short of its goals, raising questions about the effectiveness of AI in critical social services.

Unpacking the Challenges of Welfare AI

A key takeaway from the reports by Lighthouse Reports and MIT Technology Review is that the complexities of human need and bureaucratic processes are difficult for AI to navigate. The challenge stemmed not only from technological limitations but also from persistent biases in the data used to train these systems. Although city officials sought guidance from emerging global best practices, the pilot showed that even good intentions can lead to inequitable outcomes if underlying data biases are not adequately addressed. This is a crucial lesson for other cities considering similar implementations: ensuring fairness in AI requires continual engagement and oversight.

What Can AI Learn from Human Judgments?

As part of the experiment, Amsterdam officials recognized that the fairest way to determine welfare eligibility may be to keep the decision in human hands. In line with this, the edition highlighted interactive tools such as courtroom algorithms, which aim to measure the fairness of machine decisions against human judgments. As citizens began to question whether machines can surpass human discernment, a broader discussion emerged about hybrid systems that blend human insight with AI efficiency.

Safety First: The Imperative of Robot Regulations

Another topic covered in this edition is humanoid robots and their growing role in industrial applications. As the robotics industry inches closer to having robots work alongside humans in everyday environments, the need for robust safety standards becomes paramount. Without such standards, the risks to human life and safety could be unacceptably high. Humanoid robots are designed to operate in human-centric spaces, but ensuring their safety is complex and calls for specific regulations that protect workers and the public alike.

Global Implications: Insights from Amsterdam's Experiment

The developments in Amsterdam are particularly timely, given society's increasing reliance on AI and robotics. Countries like Japan, which has long embraced robotics for labor markets facing demographic challenges, might look to Amsterdam for guidance on balancing innovation with ethical standards. These considerations can inform discussions about how cities around the world navigate similar AI challenges.

A Look Towards the Future: Potential Trends

As AI and robotics expand, several trends will likely define how we approach these technologies. The debate over the ethical implications of automated decision-making will drive further research into bias mitigation. At the same time, as robots enter spaces traditionally occupied by humans, the demand for cooperative safety frameworks will become more pronounced. Together, these factors could pave the way for an era of greater transparency and accountability in AI use.

Conclusion: Conversations That Matter

Amsterdam's welfare AI experiment and the push to regulate humanoid robots are not just local issues; they raise questions relevant to societies everywhere. As we learn from successes and pitfalls alike, understanding the complexities of fairness in AI, even as we invite robots into our workplaces, will be critical for a successful technological future.
